Speeding up CI with Docker

On the Driftrock development team, we aim to develop and ship new value and fixes to our customers as quickly and as frequently as we can. An important thing that allows us to do so is Continuous Integration.

Recently we started experiencing quite long CI test times: around 10 minutes. We do Pull Request reviews in our team, which means that every time we made a small update during a PR discussion we had to wait 10 minutes before we could merge the changes or ask the reviewer to accept them and so unblock us for other work. Put in the perspective of a working day with 10 pull requests, that adds up to about 20% of the working time (1 hour 40 minutes of an 8-hour work day).

The Problem

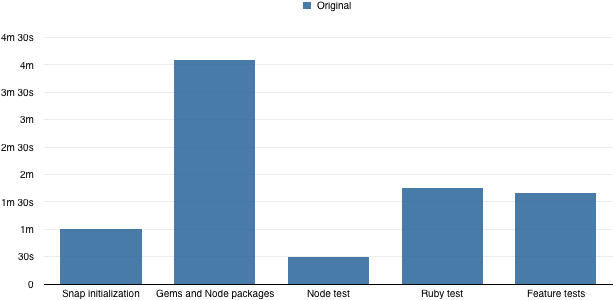

Looking at the build output log we are able to identify that the longest time is taken by Gems and Node packages installation:

The application in this case is a mixed Ruby on Rails and JavaScript (Node) app, which requires installing both the Gems bundle and the NPM packages. This installation runs on every build even though the packages do not change most of the time.

There is also some time Snap takes to initialise the build environment, which we can do nothing about. And there is the time of the actual tests, which can perhaps be improved, but the package installation time is the bigger problem now.

The Dockerfile

If you are not familiar with the Docker ecosystem, let me take a short detour from the main plot. In Docker, everything you do starts with a Docker image. A Docker image is a snapshot of an operating system: its whole file system structure, CLI tools, shell, and the programs installed in it together with all their dependencies, minus the kernel.

This is an incredibly powerful concept that allows you to download and run anything from a Ruby shell to a PostgreSQL server with a single command. There is no need to worry about installing all the libraries, resolving conflicts and so on, as we were used to when running software directly on our OSs.

To create - or build - a Docker image, Docker uses a file called a Dockerfile. This file contains steps - commands - that are run within the image to produce a new image for the next step, ultimately creating a final image that you can name and reuse to run your applications.

    FROM ruby:latest

    # Rerun bundle install only if Gemfile changes
    COPY Gemfile Gemfile.lock ./
    RUN bundle install -j20

    # Copy rest of the source
    COPY . ./

The example above creates an image containing a Ruby application with its Gems bundle installed based on the Gemfile.

The beautiful part of Dockerfiles is that each step creates an intermediate image that Docker automatically stores and caches. Because each step is deterministically defined by its command, Docker doesn't need to run it again as long as the step, and the steps before it, don't change.

For RUN steps it assumes that as long as the command text is the same, its output and all its side effects are the same. More interestingly, for COPY steps it reads the content of the specified files, computes a hash fingerprint of them, and as long as the content of these files doesn't change, it uses the cached image.

In the example above this behaviour is leveraged to run the bundle install step only if the Gemfile or Gemfile.lock file actually changes. If they don't change, we can use the cached version and so speed up the build. Because Docker uses a hash of the file content - not the file modification time - and makes no assumptions about the content, it reliably invalidates the cache whenever that is needed. That removes the worry about using a stale cache that we often have when we hear about caching.
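The difference between content hashing and modification times can be seen without Docker at all. The sketch below is an illustration only: it runs `sha256sum` on a throwaway stand-in for a Gemfile in a temporary directory, and the `content_hash` helper is a hypothetical name. This is not how Docker computes its internal cache keys, but the principle is the same.

```shell
# Illustration only: content hashes ignore modification time.
# The file below is a throwaway stand-in for a real Gemfile.
workdir=$(mktemp -d)
printf 'gem "rails"\n' > "$workdir/Gemfile"

content_hash() {
  # Print the SHA-256 of a file's content (first field of sha256sum output).
  sha256sum "$1" | cut -d" " -f1
}

before=$(content_hash "$workdir/Gemfile")

# Changing only the modification time leaves the hash unchanged...
touch "$workdir/Gemfile"
after_touch=$(content_hash "$workdir/Gemfile")

# ...while changing the content produces a different hash.
printf 'gem "puma"\n' >> "$workdir/Gemfile"
after_edit=$(content_hash "$workdir/Gemfile")

[ "$before" = "$after_touch" ] && echo "touch: cache still valid"
[ "$before" != "$after_edit" ] && echo "edit: cache invalidated"

rm -rf "$workdir"
```

A cache keyed on modification time would have been invalidated by the `touch` alone, for example after a fresh checkout on a CI node.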

The Solution

Snap CI recently introduced beta support for Docker in their build stack. Knowing about the advantages of Docker image caching, we decided to give it a go.

The plan is:

- Build a Docker image containing the Gems, the Node packages and the current build's source files, using a Dockerfile with a step that copies only the Gemfile and package.json before running bundle install && npm install

- Leverage caching so that each build only adds the new source files and does not rerun the bundle install if the dependencies don't change

- Run the tests using the built image

So we wrote this Dockerfile and used it to build the image within the build script:

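    FROM ruby:2.3.1

    # Run the install commands in the app directory, not in /
    WORKDIR /usr/src/app

    # With multiple sources, the COPY destination must end with a slash
    COPY Gemfile Gemfile.lock package.json /usr/src/app/
    RUN npm install && bundle install

    COPY . /usr/src/app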

When we did this, however, we found that the docker build command on Snap unfortunately doesn't use the cache as it does on a local machine. It just runs all the commands again every time. Perhaps this is because each build runs on a different node, or because Snap simply cleans the Docker files before each build run.

Manual hashing

If we cannot use the caching built into the docker build command, nothing prevents us from mimicking it and implementing it ourselves. So we decided to do so and changed the plan to:

- Build a base image containing the Gems and Node packages (without the build's source files) only once

- Store that image in an image repository, tagged with a hash of the Gemfile and package.json files

- Try to download the image in subsequent builds

- Create a new image by adding the current build's source files

- Run the tests using that new image

This is the script we ended up with:

    # hash of files that can influence dependencies
    PACKAGE_SHA=$( cat Dockerfile-package-base Gemfile Gemfile.lock package.json | sha256sum | cut -d" " -f1 )
    BASE_IMAGE=repository.example/driftrock/app:base-$PACKAGE_SHA

    # download image with dependencies if it exists; if not, build it
    # and push it to the repository for next time
    docker pull "$BASE_IMAGE" || (
      docker build -t "$BASE_IMAGE" -f Dockerfile-package-base . &&
      docker push "$BASE_IMAGE"
    )

    # tag locally to constant name so it can be used in Dockerfile-test
    docker tag "$BASE_IMAGE" repository.example/driftrock/app:base-current

    # build test image from the app:base-current - it adds the current source files to the base image
    docker build -f Dockerfile-test -t app-test .

    # run tests within created image
    docker run app-test ./scripts/run-tests.sh

With Dockerfile-package-base only installing the dependencies:

    FROM ruby:2.3.1

    # Run the install commands in the app directory, not in /
    WORKDIR /usr/src/app

    # With multiple sources, the COPY destination must end with a slash
    COPY Gemfile Gemfile.lock package.json /usr/src/app/
    RUN npm install && bundle install

And Dockerfile-test only adding the current source files on top of the base image:

    FROM repository.example/driftrock/app:base-current

    COPY . /usr/src/app

Inspired by how Docker caches COPY steps based on a hash of the content of the files, we compute a similar hash manually. Then we try to download the image named with this hash. If it doesn't exist - i.e. if the content of the respective files is new - we build the image and store it in our private Docker image repository under a name containing the hash of the files.

We then build an extra image, app-test, by adding the current source files to the base image we just downloaded (or built). This image only stays local to the particular build, as we don't deploy Docker images yet. Finally, we run the tests within this image.
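The pull-or-build control flow can be tried out without a Docker daemon or an image repository. The dry-run sketch below replaces the real `docker` CLI with a hypothetical shell function that treats a temporary directory as the "repository". The stub, the `ensure_base_image` helper and the image names are all assumptions made for illustration, not part of our build.

```shell
# Dry-run sketch: a fake `docker` function stands in for the real CLI so the
# pull-or-build logic can be observed. Nothing here talks to Docker.
registry=$(mktemp -d)   # pretend remote repository: one marker file per image
builds=0                # how many times the (fake) expensive build ran

docker() {
  case "$1" in
    pull)  [ -e "$registry/$2" ] ;;     # succeeds only if the image was pushed
    build) builds=$((builds + 1)) ;;    # stands in for npm/bundle install
    push)  touch "$registry/$2" ;;      # remember the image for next time
  esac
}

ensure_base_image() {
  # $1 = image name; pull it if it exists, otherwise build and push it.
  # Braces (not a subshell) keep the `builds` counter visible to the caller.
  docker pull "$1" || { docker build -t "$1" . && docker push "$1"; }
}

ensure_base_image "base-abc123"   # first build: miss, builds and pushes
ensure_base_image "base-abc123"   # same dependencies: hit, no build
ensure_base_image "base-def456"   # dependencies changed: miss again
echo "expensive builds: $builds"
rm -rf "$registry"
```

Three builds with two distinct dependency hashes trigger the expensive install step only twice; every further build with an already-seen hash pays just the download cost.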

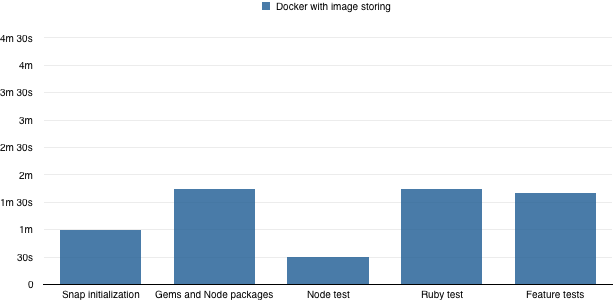

Here are the results:

In the “Gems and Node packages” part we traded the actual installation time for the time needed to download the stored image from the repository.

By moving our Snap CI builds for this application to Docker and using an image repository to store images with the installed bundle, we were able to reduce the build time from ~9 minutes to ~6 minutes.

Next steps

Downloading the image still takes some time, which depends mainly on the size of the image. If we can reduce the image size by trimming the number of packages or by removing unnecessary binaries from the Docker image, the build will speed up further.

We still have room to optimise the tests themselves and to run the independent test suites in parallel, which can save at least another 1 minute 30 seconds.
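Running independent suites in parallel could look roughly like the sketch below. The suite functions are hypothetical placeholders (`sleep` calls standing in for real test runners) and `run_in_parallel` is a name invented here. The pattern is to start each suite as a background job, then `wait` on each PID and fail the build if any suite failed.

```shell
# Sketch: run independent test suites concurrently and fail if any fails.
# The suite functions are placeholders; real ones would invoke the test runners.
ruby_suite() { sleep 1; return 0; }   # hypothetical: e.g. the Ruby tests
js_suite()   { sleep 1; return 0; }   # hypothetical: e.g. the JavaScript tests

run_in_parallel() {
  # Start every given command in the background and remember its PID.
  pids=""
  for suite in "$@"; do
    "$suite" &
    pids="$pids $!"
  done

  # Wait for each job; `wait PID` returns that job's exit status.
  failed=0
  for pid in $pids; do
    wait "$pid" || failed=1
  done
  return "$failed"
}

start=$(date +%s)
run_in_parallel ruby_suite js_suite
status=$?
elapsed=$(( $(date +%s) - start ))
echo "exit status: $status, wall time: ~${elapsed}s"
```

With both placeholders sleeping for one second, the whole run takes roughly one second instead of two, and a non-zero exit status from any suite still fails the build.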

Conclusion

Even if we don’t use Docker to actually run our apps in production, Docker significantly improved our CI.

By moving most of the build run into a Docker environment, we also removed the dependency on the build environment and the exposure to broken dependencies and similar problems. This makes us less dependent on a particular CI/CD tool, which allows us to move to a better provider, or to switch in case of an outage, faster and at lower cost.

We proved that using Docker in a CI environment is beneficial both strategically and in terms of efficiency. We will be moving other parts of the build pipeline to Docker to reduce either the time or the dependency on the specifics of the build tool we use - in our case Snap CI.

Overall this change is a significant improvement to our CI approach and we will be rolling it out to the rest of the Driftrock apps.

It is worth mentioning that our problem was slow dependency installation. If your application has fewer dependencies, or their installation takes an insignificant time compared to the rest of the build, this approach may not benefit you.